September 16th, 2015

co-authored with Zdanna Tranby, Science Museum of Minnesota

The NSF-funded Building Informal Science Education (BISE, NSF award #1010924) project brought together a team of evaluators and researchers to investigate how the rich collection of evaluation reports posted to InformalScience.org could be used to advance understanding of the trends and methods in the evaluation of informal education projects. As the BISE project team began coding reports and synthesizing findings, we noticed that some reports lacked information we considered necessary to understand how an evaluation was carried out and the context of its findings. In the process, an overarching question about evaluation reporting practices emerged: if evaluators working in the informal STEM learning field want to learn from evaluation reports on InformalScience.org and similar repositories, how can evaluators help ensure that the reports they post are useful to this audience? The evaluation literature provides guidance on what reports might look like for primary intended users (those who funded or ran the program) and other key stakeholders of an evaluation, but it lacks guidance specific to evaluator audiences. To answer this question, we analyzed what was included in, and missing from, evaluation reports on InformalScience.org. Our analysis led to a series of guiding questions for evaluators to consider when preparing reports to share with other evaluators on sites such as InformalScience.org. The full paper on which this blog post is based is available on the BISE website.

Method

The BISE team created an extensive coding framework with 32 coding categories; we used 10 of these categories, or report elements, to answer our research question (see table below).

| Coding category | Definition |
|---|---|
| Evaluand | The object(s) being evaluated. In the case of this report, the evaluand refers to objects such as exhibitions, broadcast media, websites, public programs, professional development, audience studies, etc. A full list of evaluands can be found on page 6 of the coding framework. |
| Project setting | The location of the project being evaluated, such as an institution, home, or type of media (e.g., aquarium, library, nature center, school, television, computer). |
| Evaluation type | The type of evaluation, such as formative or summative. |
| Evaluation purpose | The focus of the evaluation, including the evaluation questions. |
| Data collection methods | The method(s) used to collect data for the evaluation (e.g., focus group, interview, observation, web analytics). |
| Type of instrument(s) included in the report | The type of data collection instrument(s), if any, included with the report (e.g., focus group protocol, interview protocol, observation instrument). |
| Sample for the evaluation | The types of individuals that made up the sample or samples of the evaluation, such as the general public, specific age groups, or adult-child groups. |
| Sample size | The sample size reported for each data collection method. |
| Statistical tests | The statistical tests used for the data analysis, such as t-tests, correlation, or ANOVA, or whether no tests were used. |
| Recommendations | Whether the report includes evaluator-generated recommendations based on the evaluation data. |

Our sample comprised 520 evaluation reports: all reports written and submitted to InformalScience.org from 1990 through June 1, 2013. The reports were uploaded into the qualitative analysis software NVivo and coded using the BISE Coding Framework.

Results & Discussion

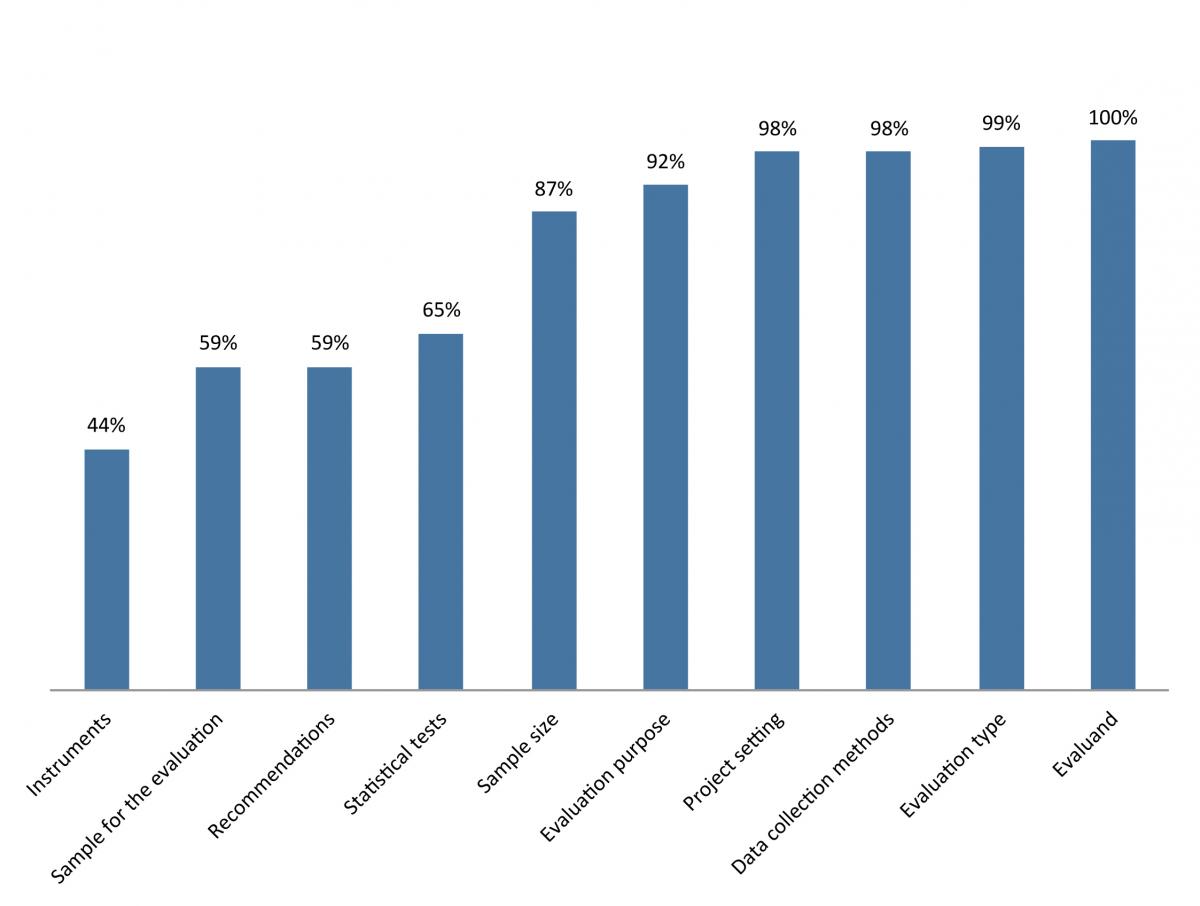

The information included in the evaluation reports of informal learning experiences was highly variable. As illustrated in Figure 1, some report elements were frequently included in reports, while other elements were sometimes missing. The evaluand was the only report element clearly described in all 520 reports. Almost all of the reports described the project setting, the type of evaluation, and the data collection methods used in a study. The evaluation purpose and sample size were included in a majority of the reports, but they were both features that some reports lacked. Although 92% of evaluation reports described the purpose of the evaluation, only 47% included the evaluation questions used. Report elements most often lacking were the data collection instruments, description of the sample for the evaluation, description of statistical tests used, and inclusion of recommendations.

Figure 1. Percent of evaluation reports on informalscience.org that included various reporting elements (n = 520)

Data collection instruments were missing from over half of the reports. Posting reports without instruments can limit their usefulness to other evaluators, because instruments help readers understand how evaluation data were gathered and judge the quality of the analyses. Instruments such as surveys and interview protocols show readers what questions were asked of the evaluation sample and how they were asked, and thus how the reported results relate to the data collection method.

Over two-fifths of reports (41%) did not describe the sample for at least one of the data collection methods used in the evaluation. Reports commonly used vague descriptors for the sample, such as "users" and "visitors," without explaining what those terms meant. Such vague descriptions make it difficult to interpret an evaluation's results and to understand which groups the findings apply to.

Of the reports that used statistics, over a third (35%) said that results were "significant" but did not specify which test was used. Without knowing the test, readers cannot judge whether it was appropriate, assess the quality of the analysis, or check the accuracy of the interpretations. When readers know what statistical analysis was conducted, they can engage more fully with the data and arguments presented in a report. A related issue was the use of the word "significant" on its own rather than "statistically significant." When a report describes results only as "significant," readers cannot be certain whether the term refers to a statistical finding or simply means important or noteworthy.

Recommendations were not included in over two-fifths of the evaluation reports (41%), and how often they appeared varied by type of evaluation. The reports themselves cannot tell us whether recommendations were actually generated as part of the evaluation process; we know only how often they were reported. If evaluators do provide recommendations as part of an evaluation, including them in the reports they post to InformalScience.org can benefit other evaluators and practitioners. For instance, a BISE synthesis paper by Beverly Serrell illustrates the value of recommendations for the informal learning field by examining what lessons about exhibition development can be drawn from the recommendations in exhibition summative evaluation reports.

Conclusion

As avenues for sharing evaluation reports online increase, how can evaluators help ensure that the reports they post are useful to other evaluators? By exploring what is available in reports posted on InformalScience.org and reflecting on reporting practices, our analysis highlighted where reports in informal education evaluation may already meet the needs of an evaluator audience and where they fall short. To help maximize learning and use across the evaluation community, we developed guiding questions that evaluators can ask themselves as they prepare a report.

Guiding questions for preparing reports for an evaluator audience

- Have I described the project setting in a way that others will be able to clearly understand the context of the project being evaluated?

- Is the subject area of the project or evaluand adequately described?

- Have I identified the type of evaluation?

- Is the purpose of the evaluation clear?

- If I used evaluation questions as part of my evaluation process, have I included them in the report?

- Have I described the data collection methods?

- Where possible, have I included the data collection instruments in the report?

- Do I provide sufficient information about the sample characteristics? If I used general terms such as “visitors,” “general public,” or “users,” do I define what ages of individuals are included in that sample?

- Have I reported sample size for each of my data collection methods?

- If I report statistically significant findings, have I noted the statistical test(s) used? Do I only use the word “significant” if referring to statistically significant findings?

- If I provided recommendations to the client, did I include them in the report?

These questions can serve as a checklist for evaluators preparing reports that will be useful to their evaluator colleagues. Adding a few technical details to the report narrative, or a longer technical appendix, can help maximize a report's contribution to the growing knowledge of the informal education evaluation community and the larger evaluation field.