January 21st, 2019

On August 23 and 24, 2018, the Center for Advancement of Informal Science Education (CAISE) Evaluation and Measurement Task Force brought together a small group of informal STEM education (ISE) and science communication (SciComm) professionals. Generously hosted by the Howard Hughes Medical Institute in Chevy Chase, Maryland, the convening was designed to open up the Task Force’s process and products to a diverse group of critical colleagues. Participants shared and compared the needs, challenges, and opportunities for building collective knowledge around measuring and evaluating the outcomes and impacts of STEM engagement efforts.

The attendees included researchers who conduct or are curious about evaluation, professional evaluators who work with both practitioners and researchers, representatives of practitioner communities who need practical tools in their everyday work, and “evaluation stakeholders” who require evidence and data to make decisions.

Common Issues Between ISE and SciComm

From the wide and deep experience across ISE and SciComm in the room, a set of common interests and issues emerged:

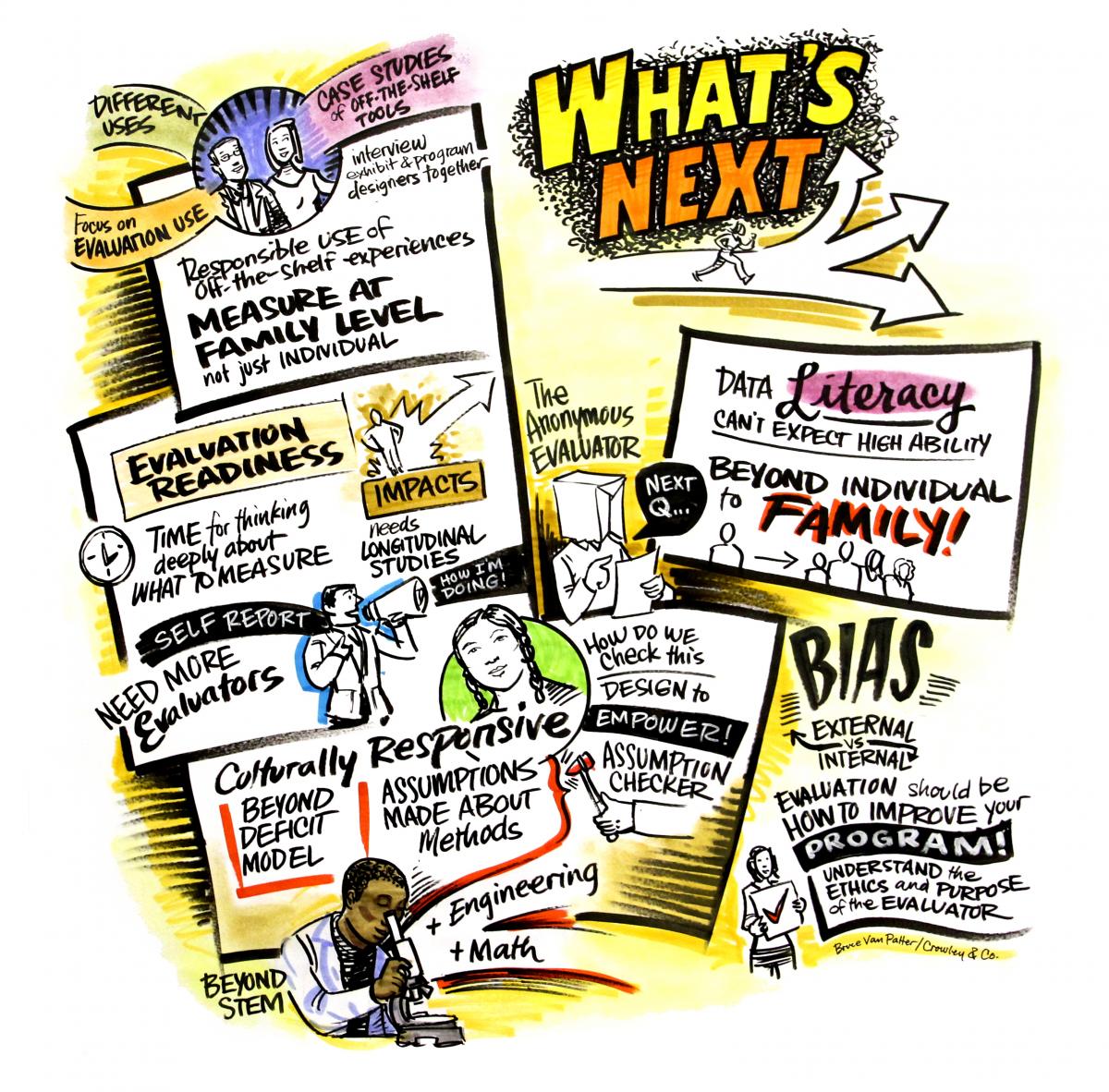

Evaluating and measuring innovative learning and engagement settings. Social media and podcasts are two examples of “innovative settings.” While an increasing amount of public engagement with STEM occurs online, the outcomes of these experiences are hard to evaluate because evaluators often cannot contact the audience. Trolls and bots add a confusing layer to the data. Social media “reach” (e.g., retweets and comments) is often used as a proxy for impact, but it does not support the kind of rigorous assessment that evaluators and researchers want. One example shared was the Brains On! podcast: the project evaluator learned that over 90% of the audience listens as a family in the car, which makes it virtually impossible to evaluate their interactions around the content unobtrusively.

Innovative methods in data collection. Attendees also noted that researchers and evaluators in the field are using new and innovative methods of data collection. For example, researchers have used sensors, GPS tracking, and eye-tracking cameras in museums and other informal learning settings to understand visitors’ behaviors, engagement, and attention. New technologies can also help automate data transcription and analysis. SciComm attendees noted potential applications of these innovations in their field, such as using similar technology to collect real-time data and give immediate feedback to scientists and other communicators who are engaging with the public.

Attending to equity in evaluation. The importance of efforts to broaden participation in STEM led to discussions about the extent to which ISE evaluations have attended closely to issues of culture, context, and equity, since these issues shape how evaluators design evaluations, collect data, conduct analyses, and interact with those being evaluated. The group raised several questions, including: How can evaluators design studies and instruments that honor the dignity of each person and empower participants? What does it mean to assess outcomes using an asset-based approach? How can evaluators consistently take the time to build relationships with communities in order to earn trust and access? And where can evaluators document failures and lessons learned?

Evaluators and researchers across a range of fields are thinking deeply about cultural responsiveness, and participants agreed that ISE and SciComm could benefit from listening to a broader range of voices outside their fields. Culturally responsive evaluation, which treats culture and context as central to every step of the evaluation process, was raised as an example of an approach that aligns with broadening participation goals. Participants also noted the importance of building evaluators’ cultural competence. Useful resources include the American Evaluation Association (AEA), which offers extensive evaluation professional development and publications; the Center for Culturally Responsive Evaluation and Assessment; and the guide to conducting culturally responsive evaluation in the NSF 2010 User-Friendly Handbook for Project Evaluation.

Our graphic recorder, Bruce Van Patter from Crowley & Co., captured a share-out of small group discussions of “What’s Next” for evaluation in ISE and SciComm.

Moving beyond individual-level impact to the family or community was also raised as an important consideration in efforts to broaden participation in STEM. Many ISE and SciComm projects measure impact at the individual level, and attendees discussed how to design for measuring impacts at the community and systems levels. How can metrics be developed for families, communities, or neighborhoods? One example is the Head Start on Engineering project, which specifically treats the family as a unit; researchers are finding it challenging to measure interest and engagement for the whole family (parents and children), particularly when the goal is to surface implications for supporting the child’s development.

Building knowledge among practitioners regarding evaluation goals, designs, and use. Attendees who had worked with practitioners at the national leadership or association level, or who had been engaged by practitioners as professional evaluators, noted that greater practitioner knowledge about evaluation could improve satisfaction for both practitioners and evaluators. Such knowledge includes understanding that the primary goal of evaluation is to improve programs, familiarity with the ethics and purpose of professional evaluators, knowledge of how to work with evaluators to meet programmatic goals, and data literacy. A few attendees noted that accomplishing these things requires considerable professional development and resource allocation, which can be particularly challenging for nonprofit ISE organizations. Increased practitioner capacity in evaluation is achievable, as a recent pilot initiative from the National Girls Collaborative Project demonstrated, but it requires substantial effort.

Researchers at the convening also articulated a tension around practitioners adapting and using publicly available tools to fit their needs, particularly needs that do not exactly align with the intended purpose of the measurement tool. In addition, they expressed a desire to establish guidelines around responsible use of off-the-shelf methodologies and instruments. As a starting point, better communication is required between those who design ISE or SciComm experiences and those who research and design the measures. Case studies of how teams of experienced designers and evaluators have worked together might be valuable, particularly if they show a behind-the-scenes conversation about what the researchers learned in the process.

Evaluating SciComm and Public Engagement with Science Events

Attendees from the SciComm community expressed a desire for additional knowledge and support around evaluation in their field. In SciComm, some research is disconnected from practice, or at least less connected than it is in ISE. One challenge may be that, ironically, researchers face academic pressure to develop and test theory rather than to ensure that their research has societal impact. SciComm researchers also typically focus on topics such as media content or the dynamics of public opinion. If SciComm is truly a two-way dialogue, then scientists should also be an “audience” for evaluation and research.

One important development presented at the convening was the Theory of Change for Public Engagement with Science document from the American Association for the Advancement of Science (AAAS), which includes a logic model that designers of experiences can use as they develop activities and settings.

Informing New CAISE Resources

A primary task of the CAISE Evaluation and Measurement Task Force is to develop resources that add texture and nuance to the understanding and use of constructs and measures across both the ISE and SciComm fields. The Task Force identified three common constructs of interest and study: (1) identity, (2) interest, and (3) engagement. For each construct, the Task Force asked a sample of STEM education researchers, science communication scholars, social psychologists, learning scientists, and informal STEM educators to share their thinking and work.

At the time of the convening, the CAISE interview series on STEM identity was in a “soft launch” phase, and the convening offered a unique opportunity to open CAISE’s resource development process to a wider group of colleagues and stakeholders for input. The feedback from the group provided critical guidance for the forthcoming interview series on interest and engagement. Both sets of video clips, interview summaries, and other supporting resources are in development and are scheduled for release in early 2019.

Conclusions and Next Steps

As any productive convening typically does, this gathering made progress toward addressing the initial questions but generated at least as many new ones. Participants identified several areas that need further investigation and development, including supporting the use of evaluation, bridging the understanding between practitioners and evaluators, and finding common ground between the ISE and SciComm fields. The discussions and presentations at the convening informed CAISE’s thinking and future directions, particularly regarding how CAISE’s work can complement the efforts of others who are building capacity to evaluate and measure lifelong STEM engagement activities.

Attendees and Task Force Members

CAISE thanks all those who contributed their time and expertise during the convening. The attendees who were invited included:

- Rashada Alexander, National Institutes of Health

- Ryan Auster, Museum of Science, Boston

- Tony Beck, National Institutes of Health

- Marjee Chmiel, Howard Hughes Medical Institute

- Emily Therese Cloyd, American Association for the Advancement of Science

- Al DeSena, National Science Foundation

- Cecilia Garibay, Garibay Group

- Margaret Glass, Association of Science-Technology Centers

- Leslie Goodyear, Education Development Center

- Josh Gutwill, Exploratorium*

- Eve Klein, Portal to the Public

- Boyana Konforti, Howard Hughes Medical Institute

- Leilah Lyons, New York Hall of Science

- Lesley Markham, Association of Science-Technology Centers

- Ellen McCallie, National Science Foundation

- Patricia Montano, Denver Evaluation Network

- Karen Peterman, Peterman Consulting

- Karen Peterson, National Girls Collaborative Project

- Andrew Quon, Howard Hughes Medical Institute

- Katherine Rowan, George Mason University*

- Sarah Simmons, Howard Hughes Medical Institute

- Brooke Smith, Kavli Foundation

- Gina Svarovsky, University of Notre Dame

- Sara K. Yeo, University of Utah

The members of the CAISE Evaluation and Measurement Task Force are:

- Jamie Bell, CAISE

- John Besley, Michigan State University

- Mac Cannady, Lawrence Hall of Science, Berkeley

- Kevin Crowley, University of Pittsburgh

- Amy Grack Nelson, Science Museum of Minnesota

- Tina Phillips, Cornell Lab of Ornithology

- Kelly Riedinger, Oregon State University

- Martin Storksdieck, Oregon State University*

* Unable to attend.