June 17th, 2022

AUTHORS: Deborah Wasserman, Colleen Popson, and Laura Weiss

Informal STEM learning institutions, educators, and evaluators face a unique challenge. Each institution sponsors a wide array of programming for all sorts of learners. Educational interactions can range from two- or three-minute encounters to multi-year intensive programs. An educator with a hands-on activity cart in a museum hallway may be interacting with a group of unrelated visitors ranging in age from toddlers to seniors. In the next room, there may be a group of high school seniors who have been engaged in a career ladder program since seventh grade. Across this spectrum of programming, contact time, and learners, how do we plan and evaluate programs in a way that is useful to program providers while contributing to a single understanding of institutional impact?

In a review of informal STEM education evaluation, Allen and Peterman (2019) challenged the field to “design methods that characterize the impact of a STEM resource or program not just as a stand-alone offering, but in terms of its capacity to support and connect to other experiences, resources, and programs in the ecosystem” (pp. 29–30). The Center of Science and Industry (COSI) Center for Research and Evaluation and the National Museum of Natural History (NMNH) have been developing a Strategic Outcomes Framework that addresses these challenges. Refined through recommendations and feedback from an NSF-funded conference (NSF #2039209) that COSI, NMNH, and PEAR organized in April 2021 for educators, practitioners, and content knowledge scholars, the framework is becoming a useful tool. Over a 10-day period, conference participants explored the framework’s potential and tested its limits. They reviewed and contributed to the soundness and utility of the outcome categories as well as the outcome types within them.

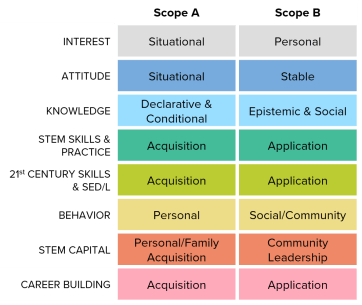

The Strategic Outcomes Framework builds on informal learning categories and strands (Bell et al., 2009; Friedman, 2008) by delineating realistic outcome expectations based on dosage and complexity of programming. In its simplest form (Figure 1), each category of outcome divides into two scopes.

Figure 1. Simplified Strategic Outcomes Framework

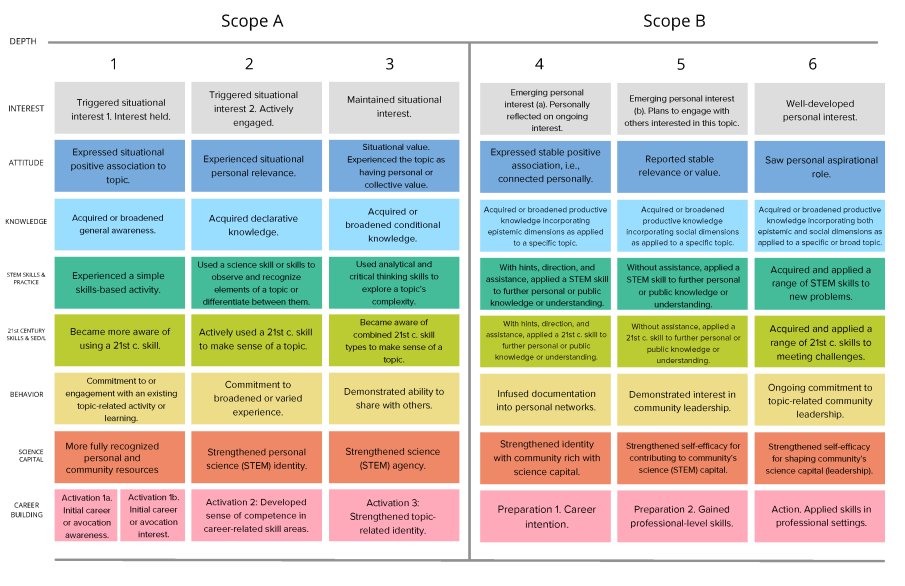

The more refined framework (Figure 2) divides each scope into outcome types arranged by depth level. These depth levels and outcome types are based on research within each discipline (see the references by outcome category below) and on feedback from conference scholars representing each discipline.

Figure 2. Refined Strategic Outcomes Framework

Specifically, the Framework’s outcomes fall into eight categories: interest, attitude, knowledge, STEM skills, 21st century skills & social emotional development and learning (SED/L), behavior, STEM capital, and career activation and preparation. Each category includes roughly six outcome types that can be mapped to programming ranging from short duration with simple content to extended duration with complex content. Once a category and outcome type have been selected, a program designer uses the outcome type’s suggested indicator statement to specify an outcome for the program’s participants. For example, a program aimed at having participants learn and apply a 21st century skill (21st Century Skills 2) might specify that outcome as “Participants in this program actively used critical thinking skills to select resources for making decisions about their health.”

Applying these outcomes involves some important principles and guidelines. First, a program rarely targets a single outcome. Instead, program design derives from combinations of outcomes, each of which leads to different educational strategies. These combinations can occur both between and within categories. Programs can use the Framework to define primary and supporting outcomes. For example, a program whose primary outcome is behavior change may have supporting attitude, knowledge, and skill outcomes that participants need in order to make that change. A program may also be defined in terms of expected and “reach” outcomes. For example, all participants in a workshop may be expected to report that they experienced situational interest, while the workshop also aims for some of those participants to develop personal interest.

Second, outcome types and even depth levels are value free. A program that targets a simple, less complex outcome can be just as valuable as one that targets deeper, more complex outcomes. Third, outcome depth does not necessarily correlate with a learner’s developmental age or academic standing. Conceptually, given enough time and a creative programming strategy, some level 6 outcomes can be targeted even in preschool settings.

Another principle is that the Framework must be nested within a system that acknowledges the voices of value holders (aka stakeholders) as they select, define, and strategize toward achieving these outcomes. We have nested the Framework within what we call “Voices and Choices Planning for Self Determination.” This process, based on Peter Checkland’s soft systems approach (Checkland & Poulter, 2006), recognizes that each value holder brings to the planning process a worldview and a set of environmental constraints, or context, that must be acknowledged. Each value holder also takes on one or more roles: customer (who benefits), owner (who has the power to start and stop the program), and change agent (who selects and implements the strategies that lead to outcomes). Discussing these varied conditions and perspectives leads to uses of the Framework that honor and empower value holder voices, which are often overlooked in the program planning process.

Both the Framework and the self-determination process can be employed at various program stages. At the institution level, they can help determine how all the programs align with each other and the institutional mission. At the individual program level, they can be used during design and planning, promotion and distribution, implementation, and evaluation.

Another principle is that the Strategic Outcomes Framework functions alongside a companion framework of educational environment objectives, which addresses cognitive, affective, and physical learning conditions separately from outcomes.

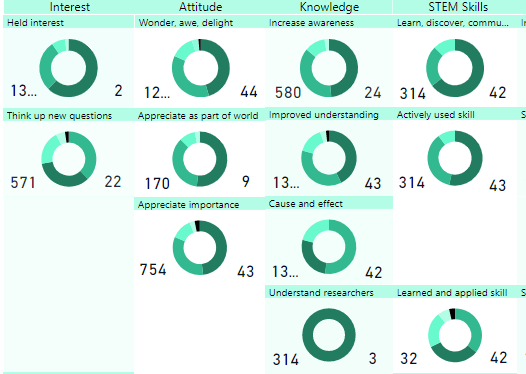

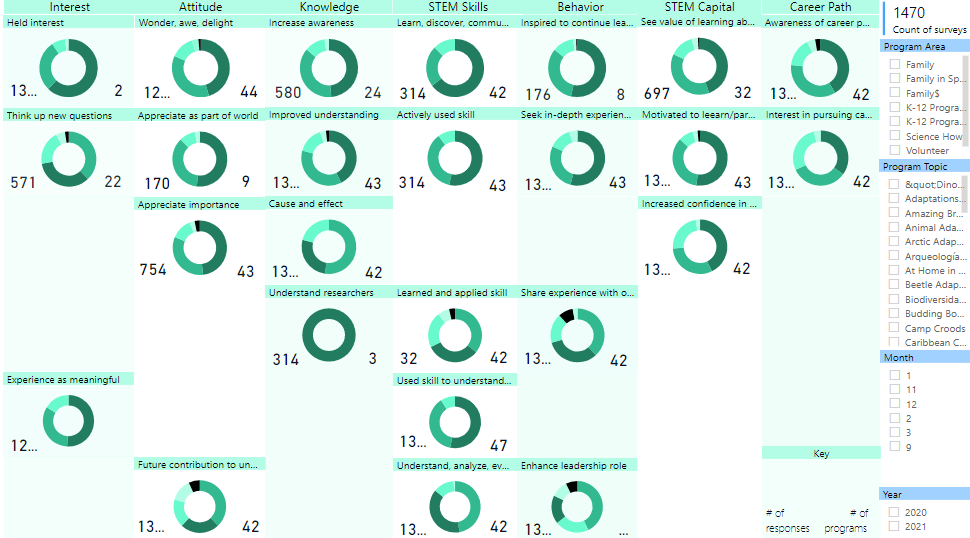

With these principles in place, the Framework has the potential to give informal education institutions a tool for strategic educational planning and evaluation at both the program and institution levels. As an example of how it informs institution-level planning, consider a report the Framework can produce (Figure 3). In this figure, donut charts illustrate the range of outcomes addressed by an institution’s programs. Darker shading represents more successful outcome achievement. The number at the upper right of each donut shows how many programs contributed to it; the number at the lower left shows the number of responses. In this example, levels 1 and 2 are well targeted across the outcome categories, but the more complex levels have not been achieved as well.

Figure 3. Institution-wide Report

Benefits of the Strategic Outcomes Framework

Early users have reported that the Framework:

- Promotes important dialogue at each program phase

- Aids in strategic planning

- Helps to articulate an overview of institutional educational intentions

- Parses learning outcomes to realistically reflect contact time and experience

- Focuses program planning

- Builds practitioner capacity

- Builds evaluation capacity

- Aids in communication to and collaboration with value holders (aka stakeholders)

- Creates a full context for learning

- Provides language and data for communicating impact

Some caveats

On the other hand, the Framework comes with some important limitations and challenges still to be solved. Among these are:

- Responsivity to cultural diversity, equity, accessibility, inclusiveness, and voice

- Reinforcing the “authority” of science as constructed by exclusive science community membership

- Accounting for outcomes not in the framework: e.g., social justice and system change

- Focus on acquisition and assimilation; what about transformation?

- How to account for constructivist, serendipitous outcomes

Future work

We continue to develop and pilot test this Framework and the broader system, and we are learning more with each collaborator. We are very interested in having others use the Framework and share feedback with us, with the understanding that future use will include validity testing. Contact us to be part of a conversation about how to use the Framework.

References

Allen, S., & Peterman, K. (2019). Evaluating Informal STEM Education: Issues and Challenges in Context. New Directions for Evaluation, 2019(161), 17–33. https://doi.org/10.1002/EV.20354

Bell, P., Lewenstein, B., Shouse, A. W., & Feder, M. A. (Eds.). (2009). Learning science in informal environments: People, places, and pursuits. The National Academies Press.

Checkland, P., & Poulter, J. (2006). Learning for action: A short definitive account of soft systems methodology and its use for practitioners, teachers and students. John Wiley & Sons, West Sussex, England.

Friedman, A. J. (Ed.). (2008). Framework for evaluating impacts of informal science education projects. National Science Foundation. https://www.informalscience.org/framework-evaluating-impacts-informal-science-education-projects

References by outcome category

Interest

Hidi, S., & Renninger, K. A. (2006). The Four-Phase Model of Interest Development. Educational Psychologist, 41(2), 111–127.

Conference scholar: K. Ann Renninger, Swarthmore College

Attitude

Bohner, G., & Dickel, N. (2011). Attitudes and attitude change. Annual Review of Psychology, 62, 391–417. https://doi.org/10.1146/annurev.psych.121208.131609

Sinatra, G. M., Kienhues, D., & Hofer, B. K. (2014). Addressing challenges to public understanding of science: Epistemic cognition, motivated reasoning, and conceptual change. Educational Psychologist, 49(2), 123–138. https://doi.org/10.1080/00461520.2014.916216

Conference scholars: Cameron Denson, North Carolina State University; Mwenda Kudumu, North Carolina State University

Knowledge

Berland, L. K., Schwarz, C. V., Krist, C., Kenyon, L., Lo, A. S., & Reiser, B. J. (2016). Epistemologies in practice: Making scientific practices meaningful for students. Journal of Research in Science Teaching, 53(7), 1082–1112. https://doi.org/10.1002/tea.21257

Carlone, H. B., Mercier, A. K., & Metzger, S. R. (2021). The Production of Epistemic Culture and Agency during a First-Grade Engineering Design Unit in an Urban Emergent School. Journal of Pre-College Engineering Education Research (J-PEER), 11(1), 10. https://doi.org/10.7771/2157-9288.1295

Duschl, R. (2008). Science education in three-part harmony: Balancing conceptual, epistemic, and social learning goals. Review of Research in Education, 32, 268–291. https://doi.org/10.3102/0091732X07309371

Lau, M., & Sikorski, T. R. (2018). Dimensions of Science Promoted in Museum Experiences for Teachers. Journal of Science Teacher Education, 29(7), 578–599. https://doi.org/10.1080/1046560X.2018.1483688

McGill, T., & Volet, S. (1997). A conceptual framework for analyzing students’ knowledge of programming. Journal of Research on Computing in Education, 29(3), 276. https://www.tandfonline.com/doi/abs/10.1080/08886504.1997.10782199

Pierson, A. E., & Clark, D. B. (2018). Engaging students in computational modeling: The role of an external audience in shaping conceptual learning, model quality, and classroom discourse. Science Education, 102(6), 1336–1362. https://doi.org/10.1002/SCE.21476

Sinatra, G. M., Kienhues, D., & Hofer, B. K. (2014). Addressing challenges to public understanding of science: Epistemic cognition, motivated reasoning, and conceptual change. Educational Psychologist, 49(2), 123–138. https://doi.org/10.1080/00461520.2014.916216

Yildirim, Z., Ozden, M. Y., & Aksu, M. (2001). Comparison of hypermedia learning and traditional instruction on knowledge acquisition and retention. Journal of Educational Research, 94(4), 207–214. https://doi.org/10.1080/00220670109598754

Conference scholar: Tiffany Sikorski, George Washington University

STEM Skills

Anderson, L., & Sosniak, L. (1994). Bloom’s Taxonomy. University of Chicago Press.

Landry, J. P., Longnecker, H. E., Haigood, B., & Feinstein, D. L. (2000). Comparing entry-level skill depths across information systems job types: Perceptions of IS faculty. Americas Conference on Information Systems, 1968–1972.

Conference scholars: Angela Calabrese Barton, Michigan State University; Edna Tan, University of North Carolina, Greensboro

21st Century Skills

Anderson, L., & Sosniak, L. (1994). Bloom’s Taxonomy. University of Chicago Press.

Armstrong, P. (2016). Bloom’s Taxonomy Background Information. Center for Teaching, Vanderbilt University. https://programs.caringsafely.org/wp-content/uploads/2019/05/Caring-Safely-Professional-Program-Course-Development.pdf

Landry, J. P., Longnecker, H. E., Haigood, B., & Feinstein, D. L. (2000). Comparing entry-level skill depths across information systems job types: Perceptions of IS faculty. Americas Conference on Information Systems, 1968–1972.

Zeidler, D. L. (2016). STEM education: A deficit framework for the twenty-first century? A sociocultural socioscientific response. Cultural Studies of Science Education, 11(1), 11–26. https://doi.org/10.1007/s11422-014-9578-z

Conference scholar: Dana Zeidler, University of South Florida

Behavior

Allen, S., Campbell, P. B., Dierking, L. D., Flagg, B. N., Friedman, A. J., Garibay, C., Korn, R., Silverstein, G., & Ucko, D. A. (2008). Framework for evaluating impacts of informal science education projects: Report from a National Science Foundation workshop (A. J. Friedman, Ed.). National Science Foundation.

Dierking, L. D. (2008). Evidence and categories of ISE impacts. In A. J. Friedman (Ed.), Framework for evaluating impacts of informal science education projects. National Science Foundation, Directorate for Education and Human Resources, DRL.

Heimlich, J. E., & Ardoin, N. M. (2008). Understanding behavior to understand behavior change: A literature review. Environmental Education Research, 14(3), 215–237.

Heimlich, J. E., Mony, P., & Yocco, V. (2013). Belief to Behavior: A Vital Link. In International Handbook of Research on Environmental Education (pp. 262–274). Routledge.

Conference scholar: Joe Heimlich, COSI Center for Research & Evaluation

STEM Capital

Archer, L., Dawson, E., DeWitt, J., Seakins, A., & Wong, B. (2015). “Science capital”: A conceptual, methodological, and empirical argument for extending Bourdieusian notions of capital beyond the arts. Journal of Research in Science Teaching, 52, 922–948.

Basu, S. J., Calabrese-Barton, A., & Tan, E. (Eds.). (2011). Democratic Science Teaching: Building the Expertise to Empower Low-income Minority Youth in Science (Vol. 3). Sense Publishers.

Carlone, H. B., & Johnson, A. (2007). Understanding the science experiences of successful women of color: Science identity as an analytic lens. Journal of Research in Science Teaching, 44(8), 1187–1218.

DeWitt, J., Archer, L., & Mau, A. (2016). Dimensions of science capital: exploring its potential for understanding students’ science participation. International Journal of Science Education, 38(16), 2431–2449. https://doi.org/10.1080/09500693.2016.1248520

Godec, S., King, H., & Archer, L. (2017). The Science Capital Teaching Approach: engaging students with science, promoting social justice. London: University College London.

Conference scholar: Spela Godec, University College London

Career Activation and Preparation

Byars-Winston, A., Gutierrez, B., Topp, S., & Carnes, M. (2011). Integrating theory and practice to increase scientific workforce diversity: a framework for career development in graduate research training. CBE—Life Sciences Education, 10(4), 357–367. https://doi.org/10.1187/cbe.10-12-0145

Hernandez, P. R., Woodcock, A., Estrada, M., & Schultz, P. W. (2018). Undergraduate research experiences broaden diversity in the scientific workforce. BioScience, 68(3), 204–211. https://doi.org/10.1093/biosci/bix163

Tai, R. H., Kong, X., Mitchell, C. E., Dabney, K. P., Read, D. M., Jeffe, D. B., Andriole, D. A., & Wathington, H. D. (2017). Examining summer laboratory research apprenticeships for high school students as a factor in entry to MD/PhD programs at matriculation. CBE—Life Sciences Education, 16(2), ar37. https://doi.org/10.1187/cbe.15-07-0161

Conference scholar: Robert Tai, University of Virginia